WASHINGTON — This year in Detroit, crews working for the city’s public housing authority cut down a row of bushy trees that had shaded the entryways to two public housing units known as Sheridan I and II.

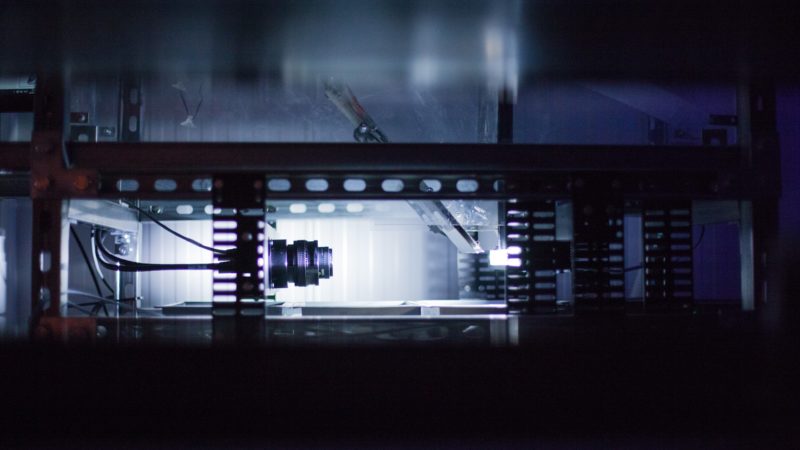

Their aim: to give newly installed security cameras an unobstructed view of the hulking, gray edifices, so round-the-clock video footage could be made available to the Detroit Police Department and its new facial recognition software whenever the Detroit Public Housing Commission files a police report.

“I think that police departments won’t make frivolous claims based solely on technology,” said Sandra Henriquez, the commission’s executive director. She added, “I think that they will use the technology as one tool that they use in bringing people into the criminal justice system.”

To critics of the widening reach of facial recognition software, such assurances are likely to ring hollow. As the software improves and as the price drops, the technology is becoming ubiquitous — on wearable police cameras, in private home security systems and at sporting events. Landlords are considering the technology as a replacement for their tenants’ key fobs, a visual check-in that could double as a general surveillance system.

But the backlash has already begun. San Francisco; Somerville, Mass.; and Oakland, Calif., all banned facial recognition software this year. And Congress is taking a look, worried that an unproven technology will ensnare innocent people while diminishing privacy rights.

“We can’t continue to expand the footprint of a technology and the reach of it when there are no guardrails for these emerging technologies to protect civil rights,” said Representative Ayanna S. Pressley, Democrat of Massachusetts, and a sponsor of the No Biometric Barriers to Housing Act, which would ban facial recognition systems in federally funded public housing. It would also require that the Department of Housing and Urban Development send to Congress a detailed report on the software.

At this point, the federal government does not regulate facial recognition software in any way, and HUD officials say they have no plans to create any regulations.

But the technology’s spread is raising serious concerns — on the political right as well as the left. The Chinese government’s use of a vast, secret system of advanced facial recognition technology to track and control its Uighur Muslim minority has set off international outrage. It has also demonstrated how functional the technology has become.

In one month this year, law enforcement in the central Chinese city of Sanmenxia, along the Yellow River, screened images of residents 500,000 times to determine if they were Uighurs.

“I haven’t broken any laws, but I get uncomfortable with the idea of the state being able to reconstruct not just my location, but who I was with, when I was there, and all that kind of thing,” said Klon Kitchen, who leads technology policy at the conservative Heritage Foundation.

“I know they can do that with metadata with just my phone,” Mr. Kitchen said, “but there is one more layer of ickiness that comes with, like — and they’ve got a picture of me.”

The issues go beyond privacy concerns. The use of facial recognition software may be racing ahead of the technology's actual efficacy. Amazon's facial recognition tool incorrectly matched 28 members of Congress with people who had been arrested, according to a 2018 test conducted by the American Civil Liberties Union. The technology especially struggles to identify people of color and women, according to a paper published last year by researchers from the Massachusetts Institute of Technology and Stanford University.

Yet those are the people most subject to the technology’s early deployment. Detroit is nearly 80 percent African-American.

Detroit officials created what they called Project Green Light in 2016 to fight crime, partnering with the Police Department, businesses and community groups to install cameras across the city. Facial recognition software has since been added to the program.

“It feels like a badge of shame to say, ‘This is a neighborhood that you shouldn’t venture into,’” said Tawana Petty, the director of the data justice program for the Detroit Community Technology Project, which opposed Project Green Light. She is also a lifelong Detroit resident who is black.

“It’s disheartening that money that could be used for adequate transportation, for affordable water, for adequate infrastructure and schools, is being used for surveillance,” she said.

“It feels like digital redlining; that people are being relegated to particular neighborhoods and identified in particular ways because those cameras exist,” she added.

The Project Green Light cameras have been up and running on the two Sheridan public housing buildings for about a month. The Detroit Police Department has yet to pull any of the footage, Ms. Henriquez said.

Ms. Henriquez said she plans to bring the Project Green Light cameras to other public housing buildings, once funding is figured out.

“I’m willing to believe, and I’m confident, that if there are errors made,” she said, “they are going to be minimal and that there are checks and balances in place that police departments will use so that they don’t arrest the wrong person.”

Representative Rashida Tlaib, the freshman Democrat whose district includes much of Detroit, does not accept that.

“My city got scammed to believe that facial recognition is somehow going to keep us safer, and it hasn’t,” said Ms. Tlaib, who is a sponsor of the anti-facial recognition legislation.

“You got scammed into buying a broken system,” she added, “and you implement it in a black city.”

Though Mr. Kitchen said he recognized the privacy and accuracy concerns, he added that he also saw the benefits of the technology in residential security.

“You can imagine if you live in an apartment complex, the landlord says, ‘Hey, listen, we’re rolling out a new initiative. We are paying for data access into registered sex offenders. If you would like to be notified when a registered sex offender is picked up on our cameras, and they’re in the neighborhood, you can do that,’” he said. “I could imagine some residents saying, ‘Oh, my gosh, that’s a huge feature. Yes, please let me know that.’”

He could also see a scenario where a police officer identified someone as a criminal suspect, only to be corrected by the technology. The software, if working well, could actually prevent clashes between innocent citizens and law enforcement.

Ms. Henriquez agreed.

“If I’m a victim or a family member or a friend is a victim of a crime, a shooting, something really awful,” she said, “I guess I would be inclined to want the police to have every tool legally possible to solve that crime.”

To detractors in Congress, such benefits seem too theoretical when the potential drawbacks are more immediate. The House bill to ban facial recognition software in public housing would also require that HUD submit a report to Congress outlining where the technology is used, its purpose and effect, the demographics of the people living in the units where it is used, and its potential effect on vulnerable communities.

“This technology is untested, and is biased, and would only criminalize vulnerable communities, and result in greater surveillance and racial profiling,” Ms. Pressley said at a recent meeting in Somerville, Mass.

Ben Ewen-Campen, a Somerville city councilor, started the petition to ban the software in the city. At the same community event, he said that cities were taking action to ban the software because “there’s been no state and federal action whatsoever, despite the fact that there is overwhelming and bipartisan support for regulations, guidelines, transparency, around the usage of facial recognition.”

“No one should have to sign away their civil rights, their privacy, to have a home,” Mr. Ewen-Campen said.

HUD officials say they have not seen any indication that facial recognition technology is being used in public housing at all. Housing authorities seeking money through the department’s emergency safety and security fund, which awards funding for security fencing, locks and cameras, have yet to ask for funding for the software.

Still, it is possible that some landlords are using it. Housing authorities are under local control and not necessarily under HUD’s scrutiny. Mr. Kitchen said facial recognition technology was going to expand.

And so will the clashes.

“Get ready, because we’re going to see lawsuits coming around the corner of people detained unlawfully because of this misidentification,” Ms. Tlaib predicted.