Facial Recognition Technology: How Does Facial Recognition Work? | Is Facial Recognition Technology the New Norm? – Built In

Scott Urban isn’t anti-technology, he merely prefers not to have it dominate his life. In discussing the reasoning behind this conscious uncoupling, the craftsman and longtime Chicago resident isn’t preachy or paranoid, just practical. When shopping at a store, for instance, he avoids self-checkout and uses cash. Instead of paying bills online, he writes checks. And he mostly gets around by bike — definitely no Uber. He is also, not surprisingly, no fan of smartphones. Doesn’t own one, doesn’t want one.

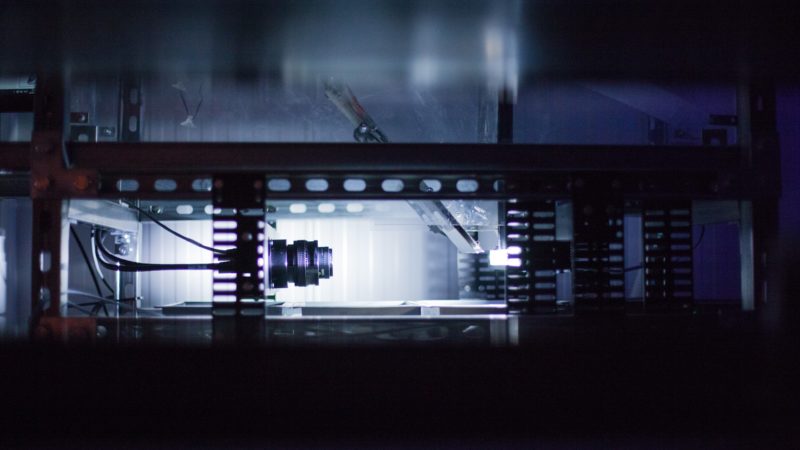

But Urban does own a computer, which he uses to email (via an encrypted service), surf the net (sans VPN, interestingly enough) and design a line of anti-facial-recognition eyewear dubbed Reflectacles with the help of CAD software. It’s a closely related outgrowth of a business he has cultivated for 15 years: creating stylish custom eyeglass frames from wood, plastic and even bone for a loyal clientele, including high-profile customers like fashion designer Tory Burch, musician Reggie Watts and former “Top Chef” star Graham Elliot. He’d still be doing that in earnest if his newish pursuit weren’t picking up steam, thanks to the proliferation of facial recognition technology and the media coverage that regularly, often negatively, documents its evolution. For the past few years, all his time has been devoted to Reflectacles.

“Realistically, it’s never going to be stopped,” Urban said of facial recognition tech. “So you have to adapt and move on.”

How Does Facial Recognition Work?

It’s mostly about facial geometry: the distance between the eyes and the depth of each socket, the width of the nose and the length of the jawline. Together, those measurements make up what’s known as a “facial signature.” Basic 2D imaging is very limited in its identification ability because flat images (like those lifted from a social media post) lack depth, and it is easily fooled by shadows and camera angles. More advanced, and increasingly common, 3D imaging projects a Spidey-like web of infrared light onto the face, each point of which marks a different facial characteristic. That light is then reflected back to the camera, and special software takes it from there, using algorithms to transform a series of pixels into a numerical code that’s ultimately compared to other coded countenances in a given database. The more varied and numerous the photos used to train those algorithms, the more accurate the results. Less commonly, and on a more micro level, a process called surface texture analysis creates a “skinprint” that takes into account the distance between pores, wrinkles and other characteristics.
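For the technically curious, here is a minimal Python sketch of that final comparison step only. It assumes each face has already been reduced to a short list of numbers (real systems derive these with trained neural networks and typically use far more values, a step not shown here), and it scores similarity with cosine similarity against a hypothetical 0.8 threshold. The names, vectors and threshold are invented for illustration and don’t reflect any particular vendor’s method.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(
    probe: Sequence[float],
    gallery: Dict[str, Sequence[float]],
    threshold: float = 0.8,  # hypothetical cutoff; real systems tune this
) -> Tuple[Optional[str], float]:
    """Compare a probe signature against every enrolled signature.

    Returns (name, score) for the most similar entry, or (None, score)
    when even the best score falls below the threshold.
    """
    best_name, best_score = None, -1.0
    for name, signature in gallery.items():
        score = cosine_similarity(probe, signature)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


# Toy three-number "signatures" for two hypothetical enrolled people.
gallery = {"alice": [0.9, 0.1, 0.3], "bob": [0.1, 0.8, 0.5]}
print(best_match([0.88, 0.12, 0.28], gallery))  # close to alice's signature
print(best_match([0.0, 0.0, 1.0], gallery))     # no one clears the threshold
```

Where that threshold sits is a design choice: raise it and the system makes fewer false matches but misses more genuine ones; lower it and the reverse happens.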

As with paying cash and avoiding smartphones, Reflectacles (priced from $148 to $164, in case you’re wondering) are another way Urban attempts to thwart technological intrusiveness and preserve as much privacy as he can, both for himself and for anyone else who’d rather not don a smothering Halloween mask or Juggalo paint. And they actually work, as he demonstrated by using a small camcorder to shoot beams of infrared light at a couple of demo pairs. True to his claim, the polycarbonate lenses with optical filters turned nearly opaque.

Seated at a small table near the front door of his garden-level apartment, which doubles as a workshop, Urban sported a bushy beard and wore a pair of his own spectacles, plus a heavy tan coat, a scarf and a winter hat. Because who needs heat in January when you can just bundle up? Every wall of the dimly lit space was covered with old paintings hung on exposed brick. He bought them cheaply during numerous thrift store excursions, and he claimed some are quite valuable. There were also a rarely jabbed heavy bag (dusty gloves draped on top), decorative string instruments (including a banjo and a cigar-box guitar) and lots of other intriguing odds and ends he has amassed over the years. His playful Australian shepherd, Hurbert, also well-coated against the chill, toyed with a rubber chicken that squealed whenever it was chomped or squashed underfoot.

Nearby sat an ancient-looking CNC mill, a machine that essentially reverse-3D prints prototypes of Urban’s Reflectacles frames before they’re manufactured in greater numbers elsewhere. He has long used the same contraption to make his custom wood frames, as well as various prototypes, and it functions similarly in the creation of Reflectacles. Fashioned from a solid block of acetate, two of the models contain lenses that stymie the type of 3D infrared facial mapping used by many security cameras and newer iPhones. Those are dubbed “Phantom” and “IRPair.” Another, the white and showier Ghost, also reflects visible light and was the first in his lineup. Urban said about 90 percent of his customers live in the U.S. The remainder come from Europe, Canada, Scandinavian countries and, increasingly, Australia, where controversy has erupted over the proposed launch of a national facial recognition database.

Of course, Australia is hardly alone among countries where a backlash is building against technology that many critics say isn’t ready for wide deployment, or should never be used in any scenario at all.

The (significant) downside: Invasion of privacy, racial and gender bias

Where to begin. Skeptics argue that facial recognition technology should be strictly regulated; its most virulent critics consider it nothing but bad news. The technology has been implemented to varying degrees by companies and governments around the world, and its risks and shortcomings are frequent fodder for unflattering media reports. Loss of privacy often tops the list of concerns, and it’s a big reason why several U.S. cities and towns on both coasts (including, most prominently, San Francisco) have banned its use by local government.

Opining about the subject for Fast Company, ACLU communications strategist Abdullah Hasan warned that we should think hard about what it would mean if governments had the ability “to identify who attends protests, political rallies, church, or AA meetings, allowing undetectable, persistent, and suspicionless surveillance on an unprecedented scale. In China, the government is already using face recognition surveillance to track and control ethnic minorities, including Uighurs. Protesters in Hong Kong have had to resort to wearing masks to trick Big Brother’s ever-watchful eye.”

At nearly the same time Hasan’s piece was published, members of the House Committee on Oversight and Reform held a hearing on facial recognition tech. “It is clear that despite the private sector’s expanded use of technology, it’s just not ready for primetime,” Oversight chair Carolyn Maloney said, according to a report on Nextgov.com. “Despite these concerns, we see facial recognition technology being used more and more in our everyday lives.” The solution, Maloney and her fellow committee members decided, was to hit the pause button until further safeguards (such as “new mechanisms for real consent, not just notice equals consent”) could be put in place.

Around the same time Hasan’s Fast Company piece ran in mid-January, the New York Times published a lengthy and widely read investigative story titled, “The Secretive Company That Might End Privacy as We Know It.” Its subhead was equally ominous: “A little-known start-up helps law enforcement match photos of unknown people to their online images — and ‘might lead to a dystopian future or something,’ a backer says.” The company in question, Clearview AI, supposedly has “scraped” three billion photos from social media sites to form its database. And it wasn’t immediately forthcoming with information.

“While the company was dodging me, it was also monitoring me,” reporter Kashmir Hill wrote. “At my request, a number of police officers had run my photo through the Clearview app. They soon received phone calls from company representatives asking if they were talking to the media — a sign that Clearview has the ability and, in this case, the appetite to monitor whom law enforcement is searching for.”

Likely prompted by the Times story, a privacy advocacy group called the Electronic Privacy Information Center drafted a letter that was signed by 40 groups ranging from the Algorithmic Justice League to World Farmers.

“While we do not believe that improved accuracy of facial recognition would justify further deployment,” a portion of the letter reads, “we do believe that the obvious problems with bias and discrimination in the systems that are currently in use is an additional reason to recommend a blanket moratorium.”

And this: “There is also growing concern that facial recognition techniques used by authoritarian governments to control minority populations and limit dissent could spread quickly to democratic societies.”


According to a recent story in the Chicago Sun-Times, the Chicago Police Department’s “use of facial recognition technology didn’t need approval from the City Council and there have been no public hearings on its use. There’s also limited federal oversight over how law enforcement agencies use facial recognition, meaning the CPD and other departments are largely left to police themselves.”

In early February, Chicago mayor Lori Lightfoot was urged to scrap the technology, which is made by New York-based Clearview and which was described during a press conference as threatening to “the civil rights, due process and civil liberties of everyone” in the city.

That’s because, in addition to privacy concerns, there are proven racial and gender bias problems with facial recognition tech, which is far more apt to misidentify minorities and women in one-to-one matches than it is white males, particularly middle-aged white males. It’s what a recent study by the National Institute of Standards and Technology (NIST) referred to in science-speak as “demographic differentials in the majority of contemporary face recognition algorithms that we evaluated.”
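In practical terms, a “demographic differential” means error rates that differ by group. As a rough illustration of how such a gap can be measured (not NIST’s actual methodology or data), the Python sketch below computes a false match rate, the share of “impostor” pairs (photos of two different people) whose similarity score still clears the match threshold, separately for each group. The group labels, scores and 0.8 threshold are all invented for illustration.

```python
from collections import defaultdict


def false_match_rates(impostor_scores, threshold=0.8):
    """Per-group false match rate at a fixed threshold.

    impostor_scores: iterable of (group, score) pairs, where every score
    compares photos of two *different* people, so any "match" is an error.
    """
    totals = defaultdict(int)
    false_matches = defaultdict(int)
    for group, score in impostor_scores:
        totals[group] += 1
        if score >= threshold:  # the system would wrongly call these the same person
            false_matches[group] += 1
    return {group: false_matches[group] / totals[group] for group in totals}


# Invented similarity scores for two hypothetical demographic groups.
scores = [("A", 0.10), ("A", 0.35), ("A", 0.82), ("A", 0.20),
          ("B", 0.85), ("B", 0.90), ("B", 0.40), ("B", 0.15)]
print(false_match_rates(scores))  # {'A': 0.25, 'B': 0.5}
```

Because both groups are judged against the same threshold, the toy system wrongly “matches” members of group B twice as often as members of group A, which is the shape of the problem the NIST researchers describe.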

Joy Buolamwini, a Ghanaian-American computer scientist and self-described “poet of code,” said in a 2016 TED Talk that “more inclusive code” (what she terms “incoding”) might be one solution, or at least the start of a solution, to this technological skewing. “[W]e’ve seen that algorithmic bias doesn’t necessarily always lead to fair outcomes,” she said during her presentation. “So what can we do about it? Well, we can start thinking about how we create more inclusive code and employ inclusive coding practices. It really starts with people. So who codes matters. Are we creating full-spectrum teams with diverse individuals who can check each other’s blind spots on the technical side… Are we factoring in fairness as we’re developing systems?”

Last August, as part of a multi-pronged criminal justice plan titled “Justice and Safety for All,” Democratic U.S. presidential candidate Bernie Sanders called for a nationwide ban on government use of facial recognition technology. Max Read, a journalist who covers technology for New York Magazine, is of a similar mindset:

“Time and time again, we’ve seen that the full negative implications of a given technology — say, the Facebook News Feed — are rarely felt, let alone understood, until the technology is sufficiently powerful and entrenched, at which point the company responsible for it has probably already pivoted into some complex new change,” he wrote in a recent essay. “Which is why we should ban facial-recognition technology. Or, at least, enact a moratorium on the use of facial-recognition software by law enforcement until the issue has been sufficiently studied and debated. For the same reason, we should impose heavy restrictions on the use of face data and facial-recognition tech within private companies as well. After all, it’s much harder to move fast and break things when you’re not allowed to move at all.”

Facial recognition improves safety — or does it?

Despite its pitfalls and limitations, however, facial recognition tech still has plenty of proponents. Many of them are in law enforcement, where some crook-catching advantage is better than none, seemingly regardless of how underdeveloped and potentially flawed the source of that advantage may prove to be. The FBI can use facial recognition to sift through millions of driver’s license photos. Last August, the New York City Police Department used the tech to catch an alleged rapist. A suspect in a child exploitation video was reportedly caught as well, by matching a blurry image of his face with a photo that showed him in the background at a gym.

Other police departments, like Chicago’s, use facial recognition to run mugshots, but not (they claim) for live surveillance. However, they reportedly have the capability to do so. According to a Wired story from May 2019, both Chicago and Detroit own cameras that can, with a software assist, live-monitor “every face passing by.”

“With few rules in place,” the article went on, “how Chicago and Detroit’s facial recognition technology affects police work—and the safety and rights of residents—will depend on the procedures the two cities impose on themselves.”

The amount of transparency (and lack thereof) around police use of facial recognition technology varies widely and can affect the outcome of criminal cases. According to a 2019 NBC News report: “Police praise the technology’s power to improve investigations, but many agencies also try to keep their methods secret. In New York, the police department has resisted attempts by defense attorneys and privacy advocates to reveal how its facial recognition system operates. In Jacksonville, Florida, authorities have refused to share details of their facial recognition searches with a man fighting his conviction for selling $50 of crack. Sometimes people arrested with the help of facial recognition aren’t aware that it was used against them.”

That’s not great. Then again, the same report also quoted an enthusiastic Florida sheriff. Using facial recognition, he said, his investigators have solved a variety of crimes — including bank robberies and missing persons cases — that “we otherwise wouldn’t have solved.”

The tech was used by the City of San Diego for seven years before the program was shut down on Dec. 31, 2019. As a result, numerous law enforcement agencies lost access to a database containing tens of thousands of face scans that could be compared against nearly two million mugshots. Members of the San Diego Police Department were especially chagrined. “We should put restrictions on technology with a clear set of rules,” San Diego Police Officers Association president Jack Schaeffer told Fast Company. “There are a lot of situations where facial recognition could help let us know who our suspects might be in a series of crimes, or inform us about a threat of violence…. I really am reluctant about a moratorium.”

Over in Washington County, Oregon, Amazon’s facial recognition software, Rekognition, has purportedly resulted in arrests “on multiple levels,” according to county public information officer Jeff Talbot, including one for the shoplifting of a $12 gas can.

“Are we solving 500 crimes a year using this technology?” Talbot asked in an interview with CNET. “Absolutely not. But do we think this is an important piece of technology that we should be utilizing while doing it responsibly? Absolutely.”

Achieving the holy facial recognition trifecta of security, privacy and convenience is a primary focus at StoneLock, at least according to the biometric security company’s vice president of sales, Yanik Brunet. Its product, the StoneLock Pro, purportedly scans faces without capturing or storing personally identifiable information. Moreover, Brunet said, only five percent of the data it collects is actually used.

Not long ago, though, StoneLock found itself at the center of a news-making kerfuffle when a New York City landlord wanted to install the company’s facial recognition system throughout one of his housing developments. In March 2019, lawyers for 134 tenants argued against it. Not only would the addition of facial recognition tech do nothing to enhance security, they contended, but it would also creep out residents and guests. A couple of months later, those same tenants filed a legal complaint to block the landlord from applying to use StoneLock’s product. Federal legislation to ban facial recognition tech from New York public housing was introduced soon thereafter. Meanwhile, the city’s law enforcement community continues to use the technology, and no firm regulations have been established.


The education sector is adopting this technology as well. Earlier this year, several hundred miles upstate from NYC, facial recognition cameras were installed at schools in the town of Lockport. Safety, proponents insisted, was the main concern. Predictably, a hubbub ensued. “Subjecting 5-year-olds to this technology will not make anyone safer, and we can’t allow invasive surveillance to become the norm in our public spaces,” Stefanie Coyle, deputy director of the Education Policy Center for the New York Civil Liberties Union, told the New York Times. “Reminding people of their greatest fears is a disappointing tactic, meant to distract from the fact that this product is discriminatory, unethical and not secure.”

On the medical front, facial recognition software appears to hold some promise in helping to identify rare genetic disorders. The authors of a 2019 study published in the journal Nature Medicine describe “a facial image analysis framework” called DeepGestalt that employs computer vision and deep-learning algorithms to “quantif[y] similarities to hundreds of syndromes.”

As summarized in a breakdown of the study by The Verge, “When tasked with distinguishing between pictures of patients with one syndrome or another, random syndrome, DeepGestalt was more than 90 percent accurate, beating expert clinicians, who were around 70 percent accurate on similar tests. When tested on 502 images showing individuals with 92 different syndromes, DeepGestalt identified the target condition in its guess of 10 possible diagnoses more than 90 percent of the time.”
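That second figure is what machine-learning researchers call top-k accuracy, with k = 10: a case counts as a hit if the correct syndrome appears anywhere in the model’s ranked list of guesses, not just in first place. The minimal Python sketch below shows the arithmetic; the syndrome labels and rankings are invented, and this is not DeepGestalt’s code.

```python
def top_k_accuracy(ranked_predictions, true_labels, k=10):
    """Share of cases whose true label appears among the model's first k ranked guesses."""
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return hits / len(true_labels)


# Hypothetical ranked guesses for three patients who all have "syndrome_x".
preds = [
    ["syndrome_x", "syndrome_y", "syndrome_z"],
    ["syndrome_y", "syndrome_x", "syndrome_z"],
    ["syndrome_z", "syndrome_y", "syndrome_w"],
]
truth = ["syndrome_x", "syndrome_x", "syndrome_x"]
print(top_k_accuracy(preds, truth, k=1))   # only the first patient counts: 1 of 3
print(top_k_accuracy(preds, truth, k=10))  # first two patients count: 2 of 3
```

That is why a top-10 score can look much better than a top-1 score for the same model: the bar for a hit is lower.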

But opponents say the technology is biased against older individuals and non-Caucasians. One medical professional, Dr. Bruce Gelb of the Icahn School of Medicine at Mount Sinai, wondered why the study was done at all. “It’s inconceivable to me that one wouldn’t send off the panel testing and figure out which one it actually is,” he said.

Where facial recognition is concerned, be it for health care or security, wariness abounds.

Brunet, for one, doesn’t dismiss the concerns many people have about the technology, whether it’s StoneLock’s or someone else’s. He said he understands the need for, and right to, privacy, and he knows how important an issue this is.


“I think legislation is very good in this space,” he said. “I believe wholeheartedly in it and I think we need to protect people using the technology. And I’m saying that because I feel we’re on the right side of that question.”

Going forward, he added, more education on the subject is key to allaying fears, because widespread adoption of facial recognition tech is coming, whether we like it or not. Someday, he mused, using a plastic security badge will seem as antiquated as driving to a movie rental store does now. Forget fingerprints. In more and more situations, our faces will be our passports.

Scott Urban, however, intends to remain an outlier. Giving people the ability to evade this fast-evolving technology is more than just a way to pay his rent and keep Hurbert in rubber chickens. It’s also a tool for preserving privacy in a world where identities are increasingly leveraged for profit and worse. The only benefit of facial recognition he can think of is, maybe, added convenience. But that’s greatly outweighed by its intrusiveness.

“I don’t have anything to hide. I just don’t want to be tracked,” he told another publication late last month. “I also get card-counters who want to not be kicked out of casinos as fast as usual.”

Because in blackjack, as in everyday life, sometimes the system just begs to be gamed.