As face-recognition technology spreads, so do ideas for subverting it – The Economist

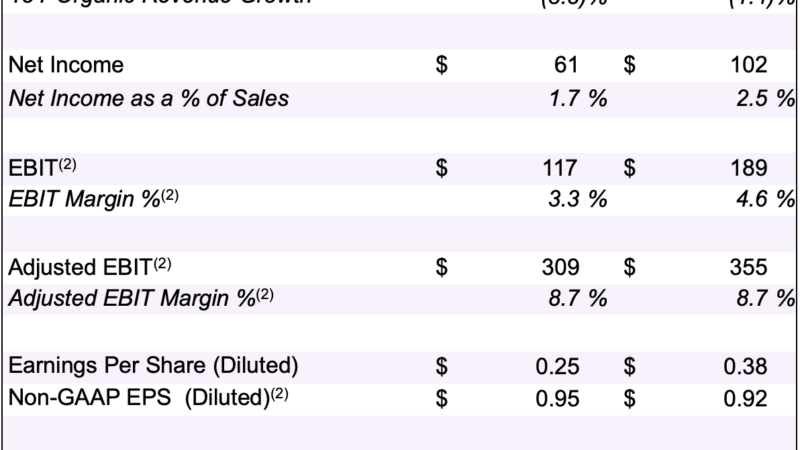

POWERED BY advances in artificial intelligence (AI), face-recognition systems are spreading like knotweed. Facebook, a social network, uses the technology to label people in uploaded photographs. Modern smartphones can be unlocked with it. Some banks employ it to verify transactions. Supermarkets watch for under-age drinkers. Advertising billboards assess consumers’ reactions to their contents. America’s Department of Homeland Security reckons face recognition will scrutinise 97% of outbound airline passengers by 2023. Networks of face-recognition cameras are part of the police state China has built in Xinjiang, in the country’s far west. And a number of British police forces have tested the technology as a tool of mass surveillance in trials designed to spot criminals on the street.

A backlash, though, is brewing. The authorities in several American cities, including San Francisco and Oakland, have forbidden agencies such as the police from using the technology. In Britain, members of parliament have called, so far without success, for a ban on police tests. Refuseniks can also take matters into their own hands by trying to hide their faces from the cameras or, as has happened recently during protests in Hong Kong, by pointing hand-held lasers at CCTV cameras to dazzle them (see picture). Meanwhile, a small but growing group of privacy campaigners and academics are looking at ways to subvert the underlying technology directly.

Face recognition relies on machine learning, a subfield of AI in which computers teach themselves to do tasks that their programmers are unable to explain to them explicitly. First, a system is trained on thousands of examples of human faces. By rewarding it when it correctly identifies a face, and penalising it when it does not, it can be taught to distinguish images that contain faces from those that do not. Once it has an idea what a face looks like, the system can then begin to distinguish one face from another. The specifics vary, depending on the algorithm, but usually involve a mathematical representation of a number of crucial anatomical points, such as the location of the nose relative to other facial features, or the distance between the eyes.
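To make the idea of a "mathematical representation" of anatomical points concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular commercial system works: the landmark names, the two ratios chosen and the matching threshold are all assumptions made for this example, and real systems learn far richer representations from training data rather than using hand-picked measurements.

```python
import math

def face_signature(landmarks):
    """Turn (x, y) landmark points into ratios that ignore image scale.

    The landmark names and the two ratios are hypothetical choices
    made for illustration.
    """
    left, right = landmarks["left_eye"], landmarks["right_eye"]
    eye_gap = math.dist(left, right)  # distance between the eyes
    eye_mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
    return [
        # nose position relative to the eyes
        math.dist(eye_mid, landmarks["nose"]) / eye_gap,
        # nose position relative to the mouth
        math.dist(landmarks["nose"], landmarks["mouth"]) / eye_gap,
    ]

def same_person(sig_a, sig_b, threshold=0.1):
    """Call two signatures a match if they are close (threshold is assumed)."""
    return math.dist(sig_a, sig_b) < threshold

# One face photographed at two sizes, and a second, different face.
alice = {"left_eye": (30, 40), "right_eye": (70, 40),
         "nose": (50, 60), "mouth": (50, 80)}
alice_close_up = {name: (2 * x, 2 * y) for name, (x, y) in alice.items()}
bob = {"left_eye": (30, 40), "right_eye": (70, 40),
       "nose": (50, 70), "mouth": (50, 85)}

print(same_person(face_signature(alice), face_signature(alice_close_up)))
print(same_person(face_signature(alice), face_signature(bob)))
```

Dividing every measurement by the distance between the eyes means the close-up and the original produce the same signature, whereas the second face, with a longer nose and shorter upper lip, lands far enough away to be rejected.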

In laboratory tests, such systems can be extremely accurate. One survey by the NIST, an American standards-setting body, found that, between 2014 and 2018, the ability of face-recognition software to match an image of a known person with the image of that person held in a database improved from 96% to 99.8%. But because the machines have taught themselves, the visual systems they have come up with are bespoke. Computer vision, in other words, is nothing like the human sort. And that can provide plenty of chinks in an algorithm’s armour.

In 2010, for instance, as part of a thesis for a master’s degree at New York University, an American researcher and artist named Adam Harvey created “CV [computer vision] Dazzle”, a style of make-up designed to fool face recognisers. It uses bright colours, high contrast, graded shading and asymmetric stylings to confound an algorithm’s assumptions about what a face looks like. To a human being, the result is still clearly a face. But a computer—or, at least, the specific algorithm Mr Harvey was aiming at—is baffled.

Dramatic make-up is likely to attract more attention from other people than it deflects from machines. HyperFace is a newer project of Mr Harvey’s. Where CV Dazzle aims to alter faces, HyperFace aims to hide them among dozens of fakes. It uses blocky, semi-abstract and comparatively innocent-looking patterns that are designed to appeal as strongly as possible to face classifiers. The idea is to disguise the real thing among a sea of false positives. Clothes with the pattern, which features lines and sets of dark spots vaguely reminiscent of mouths and pairs of eyes (see photograph), are already available.

An even subtler idea was proposed by researchers at the Chinese University of Hong Kong, Indiana University Bloomington, and Alibaba, a big Chinese information-technology firm, in a paper published in 2018. It is a baseball cap fitted with tiny light-emitting diodes that project infra-red dots onto the wearer’s face. Many of the cameras used in face-recognition systems are sensitive to parts of the infra-red spectrum. Since human eyes are not, infra-red light is ideal for covert trickery.

In tests against FaceNet, a face-recognition system developed by Google, the researchers found that the right amount of infra-red illumination could reliably prevent a computer from recognising that it was looking at a face at all. More sophisticated attacks were possible, too. By searching for faces which were mathematically similar to that of one of their colleagues, and applying fine control to the diodes, the researchers persuaded FaceNet, on 70% of attempts, that the colleague in question was actually someone else entirely.

Training one algorithm to fool another is known as adversarial machine learning. It is a productive approach, creating images that are misleading to a computer’s vision while looking meaningless to a human being’s. One paper, published in 2016 by researchers from Carnegie Mellon University, in Pittsburgh, and the University of North Carolina, showed how innocuous-looking abstract patterns, printed on paper and stuck onto the frame of a pair of glasses, could often convince a computer-vision system that a male AI researcher was in fact Milla Jovovich, an American actress.
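The principle behind such attacks can be shown with a toy example. The sketch below is not the method used in the papers mentioned here; it is a simplified, well-known variant (a single "sign of the gradient" step) applied to a made-up four-pixel linear classifier. The weights, bias, pixel values and step size are all invented for illustration.

```python
def score(weights, bias, pixels):
    """Toy linear recogniser: a positive score means 'this is the target'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def sign(value):
    """Return -1, 0 or 1 according to the sign of value."""
    return (value > 0) - (value < 0)

def adversarial_nudge(weights, pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to each
    pixel is simply that pixel's weight, so stepping against the sign
    of the weight is the most damaging small change available.
    """
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]

# Hypothetical model and image, chosen only for illustration.
weights = [0.5, -0.25, 0.75, -0.5]
bias = -0.1
image = [0.6, 0.4, 0.5, 0.3]

doctored = adversarial_nudge(weights, image, epsilon=0.2)

print(score(weights, bias, image) > 0)     # recognised before the nudge
print(score(weights, bias, doctored) > 0)  # no longer recognised afterwards
```

No pixel moves by more than the chosen step, yet the many small changes all push the same way and add up to enough to flip the verdict. Real attacks do this across thousands of pixels, which is why the individual alterations can be small or confined to an innocuous-looking patch.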

In a similar paper, presented at a computer-vision conference in July, a group of researchers at the Catholic University of Leuven, in Belgium, fooled person-recognition systems rather than face-recognition ones. They described an algorithmically generated pattern that was 40cm square. In tests, merely holding up a piece of cardboard with this pattern on it was enough to make an individual—who would be eminently visible to a human security guard—vanish from the sight of a computerised watchman.

As the researchers themselves admit, all these systems have constraints. In particular, most work only against specific recognition algorithms, limiting their deployability. Happily, says Mr Harvey, although face recognition is spreading, it is not yet ubiquitous—or perfect. A study by researchers at the University of Essex, published in July, found that although one police trial in London flagged up 42 potential matches, only eight proved accurate. Even in China, says Mr Harvey, only a fraction of CCTV cameras collect pictures sharp enough for face recognition to work. Low-tech approaches can help, too. “Even small things like wearing turtlenecks, wearing sunglasses, looking at your phone [and therefore not at the cameras]—together these have some protective effect”. ■