A JUICE-y look at Jupiter from Earth – SYFY WIRE

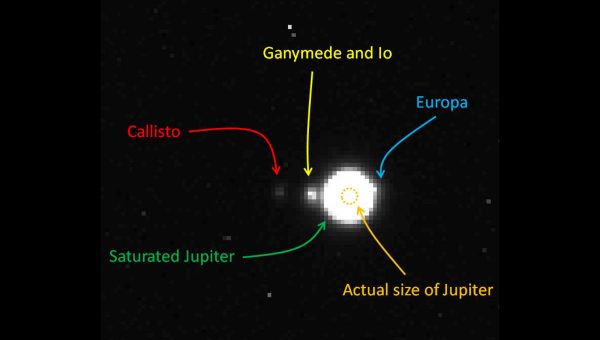

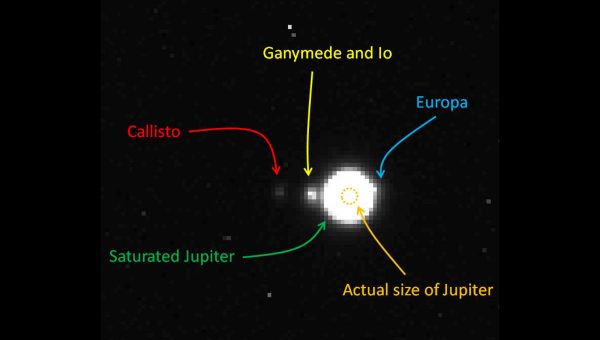

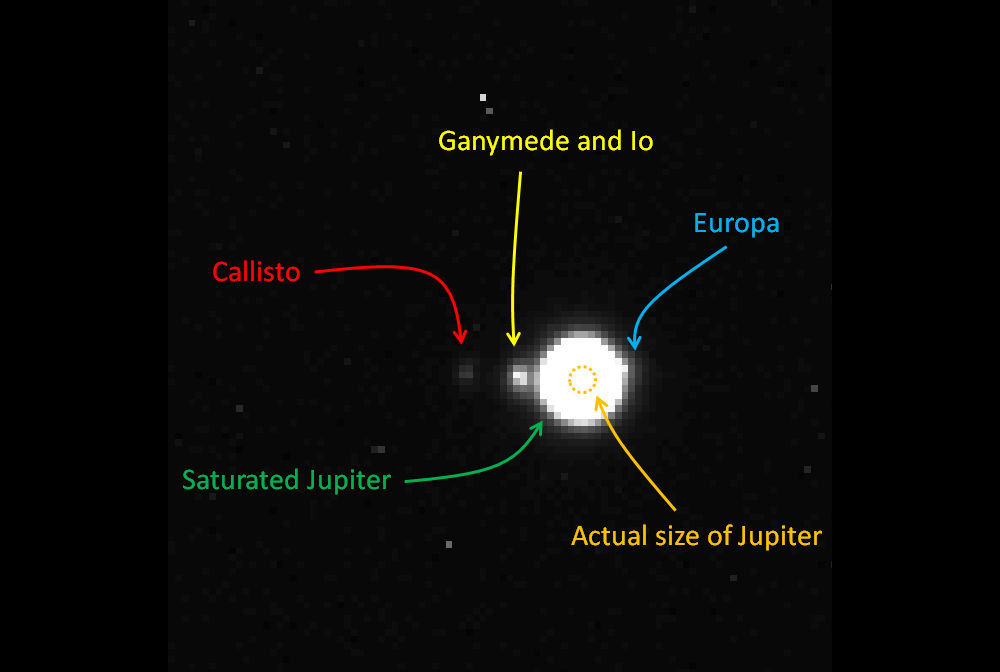

An image of Jupiter and its four big moons taken from Earth by the NavCam on the Jupiter ICy moons Explorer (JUICE), set to launch in 2022. Credit: Airbus Defence and Space


Sep 3, 2019

In 2022, the European Space Agency will launch the Jupiter ICy moons Explorer (JUICE) toward the innermost of the gas giants. It’ll take more than seven years to get there, and once it arrives it’ll go on a series of looping orbits to get close-up views of the big Galilean moons of Jupiter, something we haven’t seen in many years.

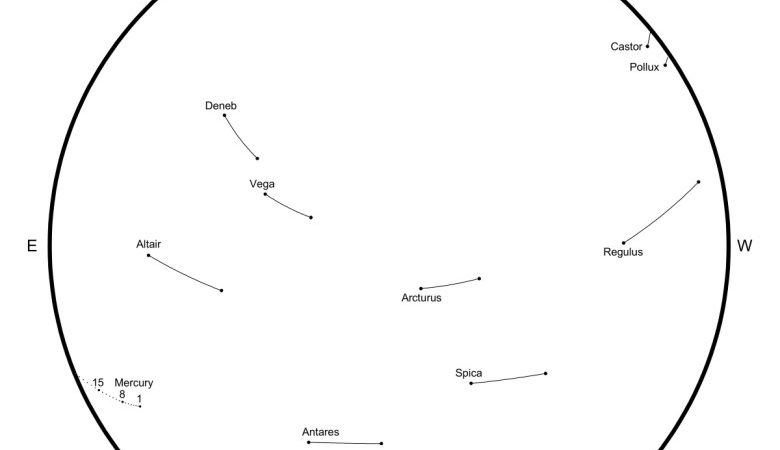

Missions like this need a lot of prep and testing, and the scientists and engineers for JUICE just did something I think I love: They hauled the Navigation Camera (the instrument used to look at stars and such to determine where the spacecraft is and where it’s pointed) onto the roof of the Airbus Defence and Space site in Toulouse, France, put it on a telescope mount, and pointed it at the night sky.

I have never heard of this being done for a spacecraft camera before! It’s a funny idea. So, what targets did they choose? Well, when your destination is Jupiter and it’s a big bright light in the southern sky right now, what would you choose?

Here’s the image they got:

An image of Jupiter and its four big moons taken from Earth by the NavCam on the Jupiter ICy moons Explorer (JUICE), set to launch in 2022. Credit: Airbus Defence and Space

Ha! That’s so cool! I mean, I know it’s not the spectacular views we’ve come to expect from spacecraft cameras, but give it a break: We’re over 700 million kilometers from Jupiter! By the time the camera is used for real it’ll be substantially closer to the planet. As it stands, the shot it took is about the same view you’d get through a good set of binoculars.

Jupiter itself is overexposed since they were looking for the moons and background stars, but you can see all four big moons (though Europa and Io were very close together as seen from Earth at the time).

You can read more about all this at the link above. One reason they did this was to test the software, making sure it can find the targets it needs and figure out where the centers of those objects are. That’s important! If an object were exactly a single pixel in size on the detector, that would be pretty easy, but in general they’re bigger. Finding the center of an extended object, one covering multiple pixels, can be a little bit tricky. That center is called the centroid, and if the object is bright on one side and faint on the other, or weirdly shaped, or too bright for the detector, the software has to be smart enough to deal with that. Once the centers are found, the observed objects can be checked against maps and identified, and then the spacecraft can use that info to point where it needs to point or get where it needs to go.
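To make the centroid idea concrete, here’s a minimal sketch of the simplest version, an intensity-weighted "center of light," in Python with NumPy. This is an illustration under stated assumptions, not the algorithm JUICE’s NavCam software actually uses: it assumes one object per image and a flat, known sky level, and the `gaussian_blob` test image is hypothetical. The second example shows why a lopsided object is tricky: for a disk lit on only one side, the center of the light is not the center of the disk.

```python
import numpy as np


def centroid(image, background=0.0):
    """Return the intensity-weighted centroid (x, y) of a single object.

    Assumes one object in the frame and a constant sky level `background`.
    """
    data = np.asarray(image, dtype=float) - background
    data = np.clip(data, 0.0, None)  # noise dipping below the sky adds no light
    total = data.sum()
    if total <= 0:
        raise ValueError("no flux above background")
    ys, xs = np.indices(data.shape)  # row index is y, column index is x
    return (xs * data).sum() / total, (ys * data).sum() / total


def gaussian_blob(shape, x0, y0, sigma, amplitude=1000.0, sky=10.0):
    """Hypothetical test image: a Gaussian spot on a flat sky."""
    ys, xs = np.indices(shape)
    return sky + amplitude * np.exp(-((xs - x0) ** 2 + (ys - y0) ** 2) / (2 * sigma**2))


# A symmetric spot: the centroid lands on the true center.
img = gaussian_blob((32, 32), x0=12.3, y0=17.8, sigma=2.0)
print(centroid(img, background=10.0))  # close to (12.3, 17.8)

# A disk of radius 8 centered at (20, 20) but lit only on its right half:
# the centroid is pulled toward the bright side, roughly 3 pixels right of x = 20.
ys, xs = np.indices((40, 40))
half_lit = ((xs - 20) ** 2 + (ys - 20) ** 2 <= 8**2) & (xs >= 20)
print(centroid(half_lit.astype(float)))
```

Real flight software has to go further (modeling the object’s shape and lighting, rejecting saturated pixels), which is exactly the "smart enough" part.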

Reading the link, I had a flashback to when I was working on STIS, a camera on Hubble. We had a whole bunch of different things the camera could do, including using a very tiny slit in front of the camera to block out all extraneous light from the sky except the target. But how do you point the telescope accurately enough to get that target centered in such a tiny slit? The tolerances were incredibly tight.

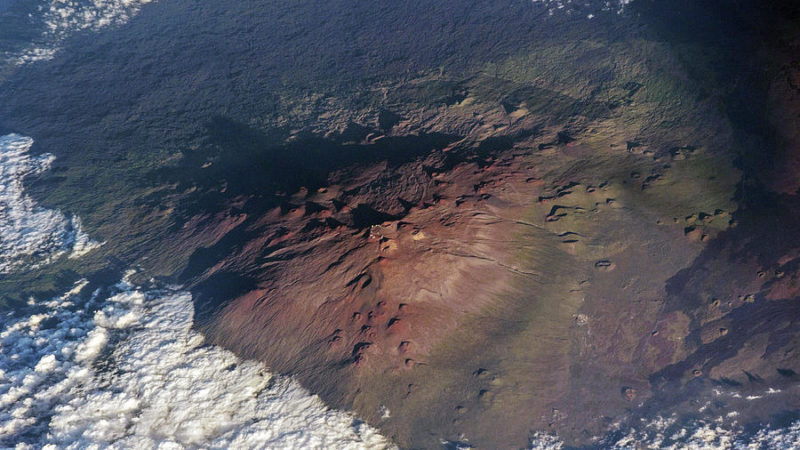

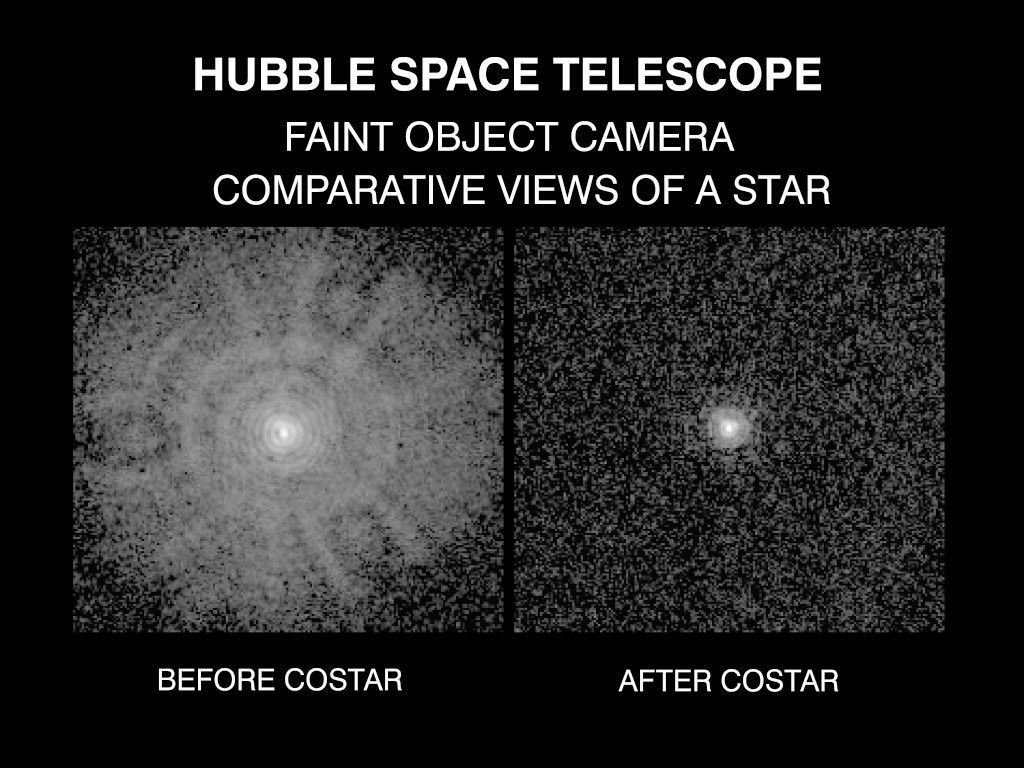

An extreme example of how light from a star gets spread out by telescope optics. On the left is a star seen through a camera on Hubble before the optics were corrected; on the right is the same star after the correction. Credit:

I wrote software to simulate this. You’d point to where you think the target is and take a short exposure. Then the ‘scope would move in a pattern (say, a grid), placing the target in different spots. The light from any target is spread out due to telescope optics (or because it’s an extended source like a galaxy), so every time you take an exposure you’re seeing a different part of that spread-out light. Assuming you know the actual way the light is spread out, you can then figure out where the center of the light pattern is, which is presumably the target.
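Here’s a rough sketch of that last step: given the brightness measured at each grid position, find the target position that best matches the known spread of light. To be clear, this is not the Hubble/STIS acquisition code; it’s a generic least-squares fit under made-up assumptions: a circular Gaussian light spread of known width (`PSF_SIGMA`), a tiny aperture that samples the light at a single point, and simple random noise. All the numbers (grid size, step, noise level) are hypothetical.

```python
import numpy as np

PSF_SIGMA = 0.05  # hypothetical width of the light spread, in arcsec, assumed known


def psf(dx, dy, sigma=PSF_SIGMA):
    """Assumed circular Gaussian spread: relative light seen at offset (dx, dy)."""
    return np.exp(-(dx**2 + dy**2) / (2 * sigma**2))


def grid_offsets(n, step):
    """Telescope offsets for an n x n grid centered on the initial pointing."""
    ticks = (np.arange(n) - (n - 1) / 2) * step
    gx, gy = np.meshgrid(ticks, ticks)
    return gx.ravel(), gy.ravel()


def fit_center(ox, oy, flux, half_width, resolution):
    """Least-squares estimate of the target position from a grid of exposures.

    Each exposure at offset (ox, oy) sees amp * psf(ox - x0, oy - y0).
    For any trial (x0, y0) the best amp has a closed form, so only the
    two position coordinates are searched, here by brute force.
    """
    trials = np.arange(-half_width, half_width + resolution / 2, resolution)
    tx, ty = np.meshgrid(trials, trials)
    tx, ty = tx.ravel()[:, None], ty.ravel()[:, None]  # one row per trial position
    model = psf(ox[None, :] - tx, oy[None, :] - ty)    # shape (n_trials, n_exposures)
    amp = (model @ flux) / np.sum(model**2, axis=1)     # best-fit brightness per trial
    resid = np.sum((flux[None, :] - amp[:, None] * model) ** 2, axis=1)
    best = np.argmin(resid)
    return tx[best, 0], ty[best, 0]


# Simulated acquisition: the target is actually a little off the initial pointing.
rng = np.random.default_rng(0)
true_x, true_y = 0.031, -0.018
ox, oy = grid_offsets(n=5, step=0.04)
flux = 500.0 * psf(ox - true_x, oy - true_y) + rng.normal(0.0, 2.0, ox.size)
print(fit_center(ox, oy, flux, half_width=0.1, resolution=0.002))
```

Brute force is slow in general but easy to trust; a real system would more likely use a proper minimizer or a cheaper closed-form estimate.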

I wrote the code to try a bunch of different patterns and telescope movements to minimize the time spent moving Hubble (that’s time you’re not observing) and to see how far apart on the sky we could spread the observations and still get the target centered.
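As a toy illustration of that trade-off, here’s a sketch comparing two ways to walk the same grid: a raster that jumps back to the start of each row, and a serpentine that sweeps back and forth. The cost model is an assumption for illustration only (a fixed settle time per move plus travel at a constant rate); Hubble’s real slew and settle behavior is more complicated.

```python
import math


def pattern(n, step, serpentine):
    """Offsets visited by an n x n grid scan, starting at one corner."""
    points = []
    for row in range(n):
        cols = range(n)
        if serpentine and row % 2 == 1:
            cols = reversed(range(n))  # sweep back the way we came
        points.extend((c * step, row * step) for c in cols)
    return points


def overhead(points, slew_rate, settle_time):
    """Hypothetical cost: fixed settle time per move plus distance / slew_rate."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:]):
        total += settle_time + math.hypot(x2 - x1, y2 - y1) / slew_rate
    return total


for serp in (False, True):
    pts = pattern(n=3, step=1.0, serpentine=serp)
    print("serpentine" if serp else "raster", overhead(pts, slew_rate=1.0, settle_time=0.0))
```

In this model, spreading the grid out (a bigger `step`) scales the travel term but not the settle term, so which one dominates decides whether a wider pattern is cheap or expensive.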

It was a fun puzzle to tackle, and in the end the software did a pretty good job… in simulation. But from what I heard later, the actual on-board software was based on what I wrote, so that was cool, and I know it worked because it was used many times in real observations.

The JUICE NavCam was being tested in a similar way here, but they got to test it on their actual destination in the sky! 1995 me is very jealous of that. Present me is just glad they got the chance to have some fun with their camera before sending it on its billion-kilometer journey. I can’t wait to see what lies at the destination!