How HR can adopt HR technology in the workplace – Human Resource Executive®

When it comes to using artificial intelligence in hiring, tread carefully. Hogan Assessments shares the secrets of how to avoid the AI trap.

BY TOM STARNER

Everyone, it seems, has a hard time staying away from shiny new toys these days. When it comes to emerging technologies like artificial intelligence and machine learning, the pull seems to be exceptionally strong.

Undeniably, those impressive tech tools are making serious progress in many ways, including beating top human players at complex games such as chess and poker. Yet, as two recent cautionary tales from the news demonstrate, AI and ML are far from a panacea. In fact, what buyers are promised and what they receive may not quite be in sync.

In the first case, a recent report in the New York Times focused on a well-funded Silicon Valley start-up, One Concern, that promised its AI-based platform could be used to pinpoint exactly where people in need of help could be located during disasters such as earthquakes or hurricanes. On paper, it sounded great, and the company scored some serious investment dollars and new clients. Problem was, when users gave it a whirl, the results fell short. The Times reported that, when Seattle’s Office of Emergency Management checked out a simulated earthquake on One Concern’s platform, troubling inconsistencies arose. For instance, many large buildings that would be vulnerable didn’t appear on the AI-generated map. Not good when you are trying to save lives.

And, while the pre-employment assessment process certainly is no life-or-death scenario (depending on the job, that is), getting it wrong can, at a minimum, lead to higher attrition rates, lower productivity or other negative financial outcomes for employers looking to stay competitive.

Along those lines, the second example in the news, reported by the Washington Post, described a new service from a company called Predictim that claimed to help people find the perfect babysitter. To do that, the service’s AI technology scans the would-be sitter’s entire social media profile (primarily Facebook, Twitter and Instagram histories), then uses recent advances in personality and data analytics to assess four “personality features” meant to weed out bad prospects: propensity toward bullying/harassment, disrespectfulness/bad attitude, explicit content and drug abuse. Unfortunately, that approach is limited when it comes to choosing the best person to care for your kids.

“At face value, this type of service has merit,” says Ryne Sherman, chief science officer at Hogan Assessment Systems in Tulsa, Okla. “However, these services ignore a long history of research showing that people strongly respond to incentives and will modify or even falsify their responses to succeed.”

And therein lies the rub, not just for Predictim but for any service offering to evaluate someone’s workplace potential on the basis of social media pages or other comparable data, according to Sherman.

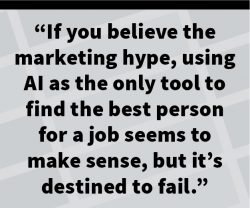

“If you believe the marketing hype, using AI as the only tool to find the best person for a job seems to make sense, but it’s destined to fail,” he says.

Sherman explains that, in the mid-2000s, many employers started using text-searching programs to quickly sift through resumes. This worked just fine until savvy applicants found ways to stuff their resumes with keywords. A simple tactic was to load the resume with the keywords employers typically used to select candidates, set in a white font. Printed on white paper, that text is invisible to the human eye. The computers slogging through resumes, however, picked up every one of those hidden keywords, increasing the applicant’s chances of being selected.
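To make the mechanism concrete, here is a minimal Python sketch of a naive keyword-only screen. The scoring function, keyword list and sample resume text are hypothetical illustrations, not any vendor’s actual system. The point is simply that a program reading the raw text of a resume counts hidden keywords a human reviewer never sees.

```python
import re

def keyword_score(resume_text: str, keywords: set[str]) -> int:
    """Count how many distinct employer keywords appear in the resume text.

    Hypothetical keyword-only screen: it reads every character in the file,
    including text a human cannot see on paper (e.g. white-on-white font).
    """
    words = set(re.findall(r"[a-z]+", resume_text.lower()))
    return len(keywords & words)

# Hypothetical keywords an employer might screen for.
KEYWORDS = {"python", "leadership", "budgeting", "negotiation"}

# What a human reader sees on the printed page.
visible = "Managed a small team and handled vendor negotiation."
# Keywords typed in white font: invisible on paper, but still in the text.
hidden = "python leadership budgeting"

print(keyword_score(visible, KEYWORDS))               # 1
print(keyword_score(visible + " " + hidden, KEYWORDS))  # 4
```

The same resume jumps from one matching keyword to four, even though a person reading the printout would see no difference.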

Today, he adds, text-searching technology has moved beyond keyword-only searches, using natural-language processing to weed out such tactics. However, the point is not about resume stuffing; it’s that job applicants strongly respond to incentives and will try to trick or cheat the system to get selected.

Which, in turn, brings us back to choosing babysitters via social media analysis. Virtually all of the research linking personality to social media usage was performed in a context in which the people being studied had no incentive to be dishonest. However, when people know their social media profiles are being used to measure their intelligence and select them for jobs, they often will start “gaming” those profiles to beat the system. If there is an incentive to have a “clean” social media profile, people will create one, Sherman explains, adding that many users already have both “professional” and “personal” Facebook accounts.

“Which account do you think they submit to a potential employer who asks? Which Instagram account will the potential babysitter send to parents?” Sherman asks.

With employers using social media records and AI-based systems to make personnel decisions, the stakes of social media use have become much higher. If employers continue down this path, services that specialize in creating sanitized social media accounts for job applicants will emerge. How, Sherman wonders, will employers combat these services? How will firms that assess personality via social media know that the profile they are getting belongs to the real “Risky Rebecca” and not the professionally cultivated “Responsible Rebecca”?

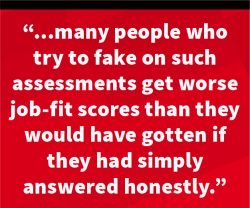

“Faking is a common problem in the personnel-selection industry, though traditional personality assessments based on questionnaires tackled this issue long ago,” he says. “Simply put, faking a questionnaire-based personality assessment is extremely difficult, and many people who try to fake on such assessments get worse job-fit scores than they would have gotten if they had simply answered honestly.”

Sherman adds that faking a social media-based personality assessment is much easier, as you just need to keep content positive and to a minimum. If AI-driven social media analysis companies cannot solve the faking problem, it will quickly put an end to their business model.

Promises, promises

“There are many promises from AI in the talent-assessment industry, but AI-driven assessments are easy to fool—akin to reading tea leaves, not actual personality traits,” Sherman says, noting that Hogan is using AI, but as an enhancement tool only.

“We’ve seen it work in practical settings, even here at Hogan,” he says. “In fact, we revised our algorithms based on what we would call AI or machine-learning models. And they’ve improved our ability to select high performers by about 20%, in certain job categories.”

He also cites marketing hype from one company that pitches its AI-based solution as the only means to land top talent. The come-on: “We have applied proven neuroscience games and cutting-edge AI to reinvent the way companies attract, select and retain talent.”

“We’re using AI, but I wouldn’t say we’re reinventing anything. We’re just making a high-quality product even better,” he says.

Ryan Ross, managing partner at Hogan, readily admits that technology has enhanced the talent-assessment business, but he says the more AI and ML remove the human element from the process, the less effective they become.

“It’s night and day now, because today we’re delivering quality assessments 24/7 in 48 different languages. You can’t do that without technology,” Ross says. “What’s really interesting, though, is some of the AI and gamification we see is much like the old saying of using a hammer to kill a mosquito.

“In many cases, some are applying this emerging technology in ways that, yes, it’s very cool, but is it productive and does it get us where we want to be?” he adds. “I would say those applications are interesting, but they are not going to achieve that goal.”

Sherman says many people today see AI and ML as if they are some sort of revolution in the assessment business, but that’s simply not the case. Helpful? Yes. A revolution? Nope.

“We’ve been using linear regression for more than 30 years to build profiles, and these AI-based solutions are just fancier versions,” he says. “That’s why we see a little bit of improvement because they’re a little bit more robust. The computing power has improved.

“For now,” he says, “the future of using AI in our business is unclear, but there is potential and that’s where we operate.”
